Retrospective: Our platform operation in 2023 - many updates and a downgrade

A lot has happened since our last article in September. Ten releases were published by December and we expect two more by the end of the year. This means that we will have published a total of 34 releases on our platform this year.

At Flying Circus, we generally take the approach of applying patches to fix bugs as quickly as possible and continuously updating the basic distribution every fortnight. We provide major functional upgrades in a six-monthly release cycle by upgrading our platform to the latest available NixOS releases.

In the event of acute problems, such as particularly risky security vulnerabilities, we can of course react more quickly: in an emergency, we get an available patch through our quality assurance team and into production environments within a few hours. In all of these situations, the assumption is that newer versions of software tend to have fewer bugs than older versions. The vast majority of developers at NixOS, for example, go to great lengths to clearly separate feature and bug fix releases and use automated tests to keep quality very high despite fast release cycles.

Alongside the many continuous updates in our release process, however, this year also brought something that rarely happens: with our platform release on 13 November (2023_029), we decided to replace a kernel that was already in regular use (Linux 6.1) with an older one (Linux 5.15). Instead of the usual continuous update, we carried out a downgrade.

How did this come about?

For about eight months, our platform team has been investigating sporadic crashes of individual virtual machines.

Sporadic here means that three VMs each recorded a single crash over a period of six months. The manner in which the crashes occurred nevertheless prompted us to observe and investigate the situation more closely. Initial results pointed to a possible kernel bug. In dialogue with the Linux developers, we were quickly able to clarify that it was a previously unknown bug in the current kernel 6.1. Three crashes in six months give an idea of how rarely this bug actually brings down a virtual machine.

At the same time, the kernel developers have not been able to reproduce the bug, despite our support and detailed bug reports (and, by now, input from Cloudflare, another affected company).

With the knowledge of a possible problem in the kernel currently used on our platform, we have been actively and closely monitoring the situation in recent months. In dialogue with the developers, we have repeatedly put forward new theories and continuously tried to create a reproducible state that triggers the error.

Thanks to this intensive monitoring, our platform team noticed when the situation changed. A few weeks ago, the number of affected systems rose suddenly: instead of individual VMs over several months, a cluster of VMs crashed within a single week. This prompted our platform team to switch into a different working mode, consider alternative strategies and rectify the situation.

Thanks to our intensive and lengthy dialogue with the developers, we have now been able to identify a subsystem in memory management as a possible cause. Extensive new features have been implemented there since kernel 5.17; they are intended to improve the memory performance of many systems and, in general, to increase stability.

With this information, we had a strong suspicion as to the kernel version in which the problem could have crept in. A downgrade to Linux kernel 5.15, the most recent long-term branch before 5.17, was therefore the logical decision to counter the current problem.

What does this mean for the Flying Circus platform?

With the Linux kernel, stability is the top priority for developers. In very rare cases, however, automated tests and test operation in staging environments are not sufficient to find such errors, because they often depend on very specific load behaviour, configurations and data on the hard disks, and sometimes only occur after a server has been running for a long time.

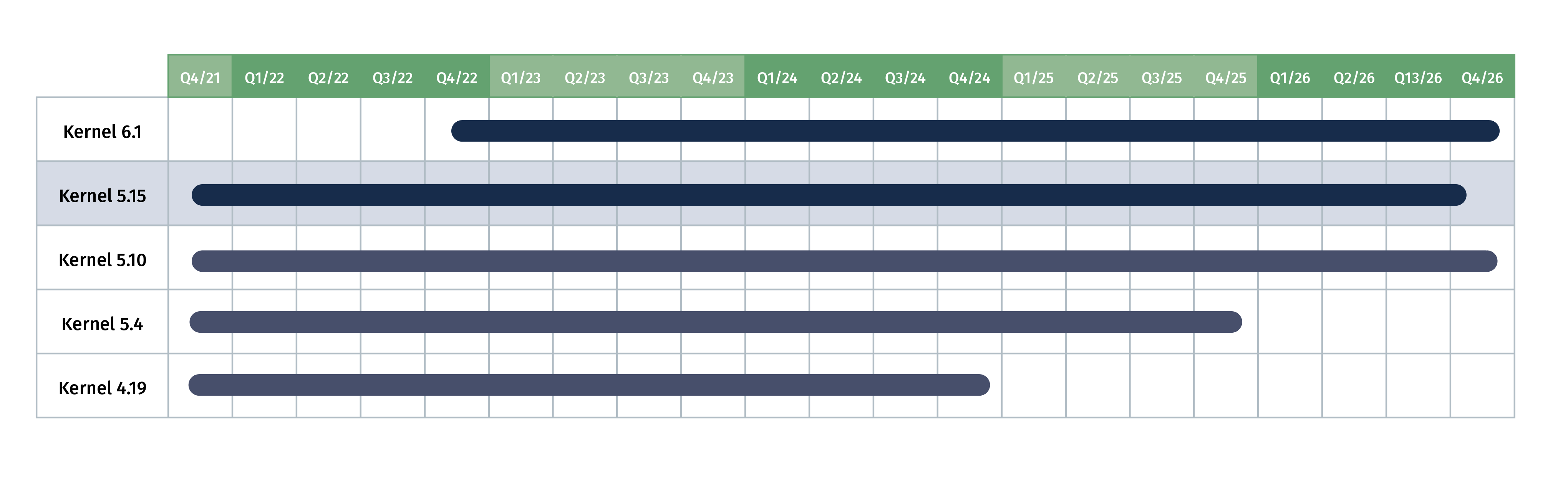

In order to guarantee a high level of stability, especially for a basic component such as the Linux kernel, we choose so-called ‘long-term’ (LTS) version branches, which receive only bug fixes over several years.

With the release of our platform based on NixOS 23.05, we changed the kernel. From then on, we used LTS version 6.1, which was released on 11 December 2022 and is also the standard version for NixOS. When changing the Linux kernel, we make sure that the new branch has already received a certain number of bug fix releases. Kernel 6.1 had already received 30 bug fix releases at the time of the switch. It had also been extensively tested in the NixOS release process and had not caused any problems in our staging environment.
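The selection rule described above can be written down as a small check. This is only a sketch under stated assumptions: the `KernelBranch` type, the `is_candidate` function and the threshold of 20 are hypothetical and chosen for illustration. The article only says that "a certain number" of bug fix releases is required and that 6.1 had received 30.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KernelBranch:
    name: str             # version branch, e.g. "6.1"
    is_lts: bool          # maintained upstream as a long-term branch
    bugfix_releases: int  # bug fix releases published so far


def is_candidate(branch: KernelBranch, min_bugfix_releases: int) -> bool:
    """A branch qualifies only if it is LTS and has matured enough."""
    return branch.is_lts and branch.bugfix_releases >= min_bugfix_releases


# Figures from the article: 6.1 is an LTS branch and had received
# 30 bug fix releases at the time of the switch.
# The threshold of 20 is purely illustrative, not a Flying Circus policy.
linux_6_1 = KernelBranch(name="6.1", is_lts=True, bugfix_releases=30)
print(is_candidate(linux_6_1, min_bugfix_releases=20))
```

As the rest of the article shows, passing such a check does not rule out rare bugs; it only reduces the chance of running into early regressions.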

Does the Flying Circus platform with Linux 5.15 run a particularly old kernel? No! We have switched to an older LTS branch (Linux 5.15 was originally released on 31 October 2021), but like kernel 6.1, it receives a weekly bug fix release (and is now at 5.15.140). We chose this kernel because the discussion with the kernel developers points to a subsystem that was revised in version 5.17, so there is a very high probability that the problem we found does not exist in the 5.15 branch. Kernels from Linux 5.17 onwards seem to have a problem that causes crashes at Flying Circus and at other infrastructure providers such as Cloudflare.
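The reasoning behind the choice boils down to a version comparison. The following is a minimal sketch, assuming the suspected change really did arrive in 5.17 as the discussion suggests; the function names are hypothetical, and a result of `True` only means "possibly affected", not "known to crash".

```python
def parse_kernel_version(version: str) -> tuple[int, ...]:
    """Turn a version string such as '5.15.140' into (5, 15, 140)."""
    return tuple(int(part) for part in version.split("."))


# Assumption taken from the article: the subsystem was revised in 5.17.
FIRST_SUSPECT_RELEASE = (5, 17)


def is_possibly_affected(version: str) -> bool:
    """True if the kernel is at or after the release that revised the subsystem."""
    # Only major and minor matter; the bug fix number does not change the branch.
    return parse_kernel_version(version)[:2] >= FIRST_SUSPECT_RELEASE


for candidate in ("5.10", "5.15.140", "6.1"):
    print(candidate, is_possibly_affected(candidate))
```

Comparing tuples of integers rather than strings matters here: as plain text, "5.9" would sort after "5.17".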

Is this still an update at all?

Yes. Before the update to NixOS 23.05 we were running kernel 5.10, so we still offer a newer kernel than last year. Over time, with the information we have gathered and the ongoing work of the community, this problem will also be solved.

The kernel we have currently chosen, 5.15, will continue to receive updates until the end of 2026, so we are under no time pressure for the time being. Before a later change to kernel 6.1 (or perhaps an even newer kernel), we will check whether the problem has been identified in the meantime, or whether there are findings that make us confident it has been solved.